Abstract

Species knowledge is essential for protecting biodiversity. The identification of plants by conventional keys is complex, time consuming, and due to the use of specific botanical terms frustrating for non-experts. This creates a hard to overcome hurdle for novices interested in acquiring species knowledge. Today, there is an increasing interest in automating the process of species identification. The availability and ubiquity of relevant technologies, such as, digital cameras and mobile devices, the remote access to databases, new techniques in image processing and pattern recognition let the idea of automated species identification become reality. This paper is the first systematic literature review with the aim of a thorough analysis and comparison of primary studies on computer vision approaches for plant species identification. We identified 120 peer-reviewed studies, selected through a multi-stage process, published in the last 10 years (2005–2015). After a careful analysis of these studies, we describe the applied methods categorized according to the studied plant organ, and the studied features, i.e., shape, texture, color, margin, and vein structure. Furthermore, we compare methods based on classification accuracy achieved on publicly available datasets. Our results are relevant to researches in ecology as well as computer vision for their ongoing research. The systematic and concise overview will also be helpful for beginners in those research fields, as they can use the comparable analyses of applied methods as a guide in this complex activity.

Similar content being viewed by others

1 Introduction

Biodiversity is declining steadily throughout the world [113]. The current rate of extinction is largely the result of direct and indirect human activities [95]. Building accurate knowledge of the identity and the geographic distribution of plants is essential for future biodiversity conservation [69]. Therefore, rapid and accurate plant identification is essential for effective study and management of biodiversity.

In a manual identification process, botanist use different plant characteristics as identification keys, which are examined sequentially and adaptively to identify plant species. In essence, a user of an identification key is answering a series of questions about one or more attributes of an unknown plant (e.g., shape, color, number of petals, existence of thorns or hairs) continuously focusing on the most discriminating characteristics and narrowing down the set of candidate species. This series of answered questions leads eventually to the desired species. However, the determination of plant species from field observation requires a substantial botanical expertise, which puts it beyond the reach of most nature enthusiasts. Traditional plant species identification is almost impossible for the general public and challenging even for professionals that deal with botanical problems daily, such as, conservationists, farmers, foresters, and landscape architects. Even for botanists themselves species identification is often a difficult task. The situation is further exacerbated by the increasing shortage of skilled taxonomists [47]. The declining and partly nonexistent taxonomic knowledge within the general public has been termed “taxonomic crisis” [35].

The still existing, but rapidly declining high biodiversity and a limited number of taxonomists represents significant challenges to the future of biological study and conservation. Recently, taxonomists started searching for more efficient methods to meet species identification requirements, such as developing digital image processing and pattern recognition techniques [47]. The rich development and ubiquity of relevant information technologies, such as digital cameras and portable devices, has brought these ideas closer to reality. Digital image processing refers to the use of algorithms and procedures for operations such as image enhancement, image compression, image analysis, mapping, and geo-referencing. The influence and impact of digital images on the modern society is tremendous and is considered a critical component in a variety of application areas including pattern recognition, computer vision, industrial automation, and healthcare industries [131].

Image-based methods are considered a promising approach for species identification [47, 69, 133]. A user can take a picture of a plant in the field with the build-in camera of a mobile device and analyze it with an installed recognition application to identify the species or at least to receive a list of possible species if a single match is impossible. By using a computer-aided plant identification system also non-professionals can take part in this process. Therefore, it is not surprising that large numbers of research studies are devoted to automate the plant species identification process. For instance, ImageCLEF, one of the foremost visual image retrieval campaigns, is hosting a plant identification challenge since 2011. We hypothesize that the interest will further grow in the foreseeable future due to the constant availability of portable devices incorporating myriad precise sensors. These devices provide the basis for more sophisticated ways of guiding and assisting people in species identification. Furthermore, approaching trends and technologies such as augmented reality, data glasses, and 3D-scans give this research topic a long-term perspective.

An image classification process can generally be divided into the following steps (cp. Fig. 1):

-

Image acquisition—The purpose of this step is to obtain the image of a whole plant or its organs so that analysis towards classification can be performed.

-

Preprocessing—The aim of image preprocessing is enhancing image data so that undesired distortions are suppressed and image features that are relevant for further processing are emphasized. The preprocessing sub-process receives an image as input and generates a modified image as output, suitable for the next step, the feature extraction. Preprocessing typically includes operations like image denoising, image content enhancement, and segmentation. These can be applied in parallel or individually, and they may be performed several times until the quality of the image is satisfactory [51, 124].

-

Feature extraction and description—Feature extraction refers to taking measurements, geometric or otherwise, of possibly segmented, meaningful regions in the image. Features are described by a set of numbers that characterize some property of the plant or the plant’s organs captured in the images (aka descriptors) [124].

-

Classification—In the classification step, all extracted features are concatenated into a feature vector, which is then being classified.

The main objectives of this paper are (1) reviewing research done in the field of automated plant species identification using computer vision techniques, (2) to highlight challenges of research, and (3) to motivate greater efforts for solving a range of important, timely, and practical problems. More specifically, we focus on the Image Acquisition and the Feature Extraction and Description step of the discussed process since these are highly influenced by the object type to be classified, i.e., plant species. A detailed analysis of the Preprocessing and the Classification steps is beyond the possibilities of this review. Furthermore, the applied methods within these steps are more generic and mostly independent of the classified object type.

2 Methods

We followed the methodology of a systematic literature review (SLR) to analyze published research in the field of automated plant species identification. Performing a SLR refers to assessing all available research concerning a research subject of interest and to interpret aggregated results of this work. The whole process of the SLR is divided into three fundamental steps: (I) defining research questions, (II) conducting the search process for relevant publications, and (III) extracting necessary data from identified publications to answer the research questions [75, 109].

2.1 Research Questions

We defined the following five research questions:

RQ-1: Data demographics: How are time of publication, venue, and geographical author location distributed across primary studies?—The aim of this question is getting an quantitative overview of the studies and to get an overview about the research groups working on this topic.

RQ-2: Image Acquisition: How many images of how many species were analyzed per primary study, how were these images been acquired, and in which context have they been taken?—Given that the worldwide estimates of flowering plant species (aka angiosperms) vary between 220,000 [90, 125] and 420,000 [52], we would like to know how many species were considered in studies to gain an understanding of the generalizability of results. Furthermore, we are interested in information on where plant material was collected (e.g., fresh material or web images); and whether the whole plant was studied or selected organs.

RQ-3: Feature detection and extraction: Which features were extracted and which techniques were used for feature detection and description?—The aim of this question is categorizing, comparing, and discussing methods for detecting and describing features used in automated plant species classification.

RQ-4: Comparison of studies: Which methods yield the best classification accuracy?—To answer this question, we compare the results of selected primary studies that evaluate their methods on benchmark datasets. The aim of this question is giving an overview of utilized descriptor-classifier combinations and the achieved accuracies in the species identification task.

RQ-5: Prototypical implementation: Is a prototypical implementation of the approach such as a mobile app, a web service, or a desktop application available for evaluation and actual usage?—This question aims to analyzes how ready approaches are to be used by a larger audience, e.g., the general public.

2.2 Data Sources and Selection Strategy

We used a combined backward and forward snowballing strategy for the identification of primary studies (see Fig. 2). This search technique ensures to accumulate a relatively complete census of relevant literature not confined to one research methodology, one set of journals and conferences, or one geographic region. Snowballing requires a starting set of publications, which should either be published in leading journals of the research area or have been cited many times. We identified our starting set of five studies through a manual search on Google Scholar (see Table 1). Google Scholar is a good alternative to avoid bias in favor of a specific publisher in the initial set of the sampling procedure. We then checked whether the publications in the initial set were included in at least one of the following scientific repositories: (a) Thomson Reuters Web of ScienceTM, (b) IEEE Xplore®, (c) ACM Digital Library, and (d) Elsevier ScienceDirect®. Each publication identified in any of the following steps was also checked for being listed in at least one of these repositories to restrict our focus to high quality publications solely.

Backward snowball selection means that we recursively considered the referenced publications in each paper derived through manual search as candidates for our review. Forward snowballing analogously means that we, based on Google Scholar citations, identified additional candidate publications from all those studies that were citing an already included publication. For a candidate to be included in our study, we checked further criteria in addition to being listed in the four repositories. The criteria referred to the paper title, which had to comply to the following pattern:

S1 AND (S2 OR S3 OR S4 OR S5 OR S6) AND NOT (S7) where

-

S1: (plant* OR flower* OR leaf OR leaves OR botan*)

-

S2: (recognition OR recognize OR recognizing OR recognized)

-

S3: (identification OR identify OR identifying OR identified)

-

S4: (classification OR classify OR classifying OR classified)

-

S5: (retrieval OR retrieve OR retrieving OR retrieved)

-

S6: (“image processing” OR “computer vision”)

-

S7: (genetic OR disease* OR “remote sensing” OR gene OR DNA OR RNA).

Using this search string allowed us to handle the large amount of existing work and ensured to search for primary studies focusing mainly on plant identification using computer vision. The next step, was removing studies from the list that had already been examined in a previous backward or forward snowballing iteration. The third step, was removing all studies that were not listed in the four literature repositories listed before. The remaining studies became candidates for our survey and were used for further backward and forward snowballing. Once no new papers were found, neither through backward nor through forward snowballing, the search process was terminated. By this selection process we obtained a candidate list of 187 primary studies.

To consider only high quality peer reviewed papers, we eventually excluded all workshop and symposium papers as well as working notes and short papers with less than four pages. Review papers were also excluded as they constitute no primary studies. To get an overview of the more recent research in the research area, we restricted our focus to the last 10 years and accordingly only included papers published between 2005 and 2015. Eventually, the results presented in this SLR are based upon 120 primary studies complying to all our criteria.

2.3 Data Extraction

To answer RQ-1, corresponding information was extracted mostly from the meta-data of the primary studies. Table 2 shows that the data extracted for addressing RQ-2, RQ-3, RQ-4, and RQ-5 are related to the methodology proposed by a specific study. We carefully analyzed all primary studies and extracted necessary data. We designed a data extraction template used to collect the information in a structured manner (see Table 2). The first author of this review extracted the data and filled them into the template. The second author double-checked all extracted information. The checker discussed disagreements with the extractor. If they failed to reach a consensus, other researchers have been involved to discuss and resolve the disagreements.

2.4 Threats to Validity

The main threats to the validity of this review stem from the following two aspects: study selection bias and possible inaccuracy in data extraction and analysis. The selection of studies depends on the search strategy, the literature sources, the selection criteria, and the quality criteria. As suggested by [109], we used multiple databases for our literature search and provide a clear documentation of the applied search strategy enabling replication of the search at a later stage. Our search strategy included a filter on the publication title in an early step. We used a predefined search string, which ensures that we only search for primary studies that have the main focus on plant species identification using computer vision. Therefore, studies that propose novel computer vision methods in general and evaluating their approach on a plant species identification task as well as studies that used unusual terminology in the publication title may have been excluded by this filter. Furthermore, we have limited ourselves to English-language studies. These studies are only journal and conference papers with a minimum of four pages. However, this strategy excluded non-English papers in national journals and conferences. Furthermore, inclusion of grey literature such as PhD or master theses, technical reports, working notes, and white-papers also workshop and symposium papers might have led to more exhaustive results. Therefore, we may have missed relevant papers. However, the ample list of included studies indicates the width of our search. In addition, workshop papers as well as grey literature is usually finally published on conferences or in journals. Therefore excluding grey literature and workshop papers avoids duplicated primary studies within a literature review. To reduce the threat of inaccurate data extraction, we elaborated a specialized template for data extraction. In addition, all disagreements between extractor and checker of the data were carefully considered and resolved by discussion among the researchers.

3 Results

This section reports aggregated results per research question based on the data extracted from primary studies.

3.1 Data Demographics (RQ-1)

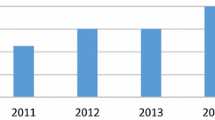

To study the relative interest in automating plant identification over time, we aggregated paper numbers by year of publication (see Fig. 3). The figure shows a continuously increasing interest in this research topic. Especially, the progressively rising numbers of published papers in recent years show that this research topic is considered highly relevant by researchers today.

To gain an overview of active research groups and their geographical distribution, we analyzed the first author’s affiliation. The results depict that the selected papers are written by researchers from 25 different countries. More than half of these papers are from Asian countries (73/120), followed by European countries (26/120), American countries (14/120), Australia (4/120), and African countries (3/120). 34 papers have a first author from China, followed by France (17), and India (13). 15 papers are authored by a group located in two or more different countries. 108 out of the 120 papers are written solely by researches with computer science or engineering background. Only one paper is solely written by an ecologist. Ten papers are written in interdisciplinary groups with researchers from both fields. One paper was written in an interdisciplinary group where the first author has an educational and the second author an engineering background.

3.2 Image Acquisition (RQ-2)

The purpose of this first step within the classification process is obtaining an image of the whole plant or its organs for later analysis towards plant classification.

3.2.1 Studied Plant Organs

Identifying species requires recognizing one or more characteristics of a plant and linking them with a name, either a common or so-called scientific name. Humans typically use one or more of the following characteristics: the plant as a whole (size, shape, etc.), its flowers (color, size, growing position, inflorescence, etc.), its stem (shape, node, outer character, bark pattern, etc.), its fruits (size, color, quality, etc.), and its leaves (shape, margin, pattern, texture, vein etc.) [114].

A majority of primary studies utilizes leaves for discrimination (106 studies). In botany, a leaf is defined as a usually green, flattened, lateral structure attached to a stem and functioning as a principal organ of photosynthesis and transpiration in most plants. It is one of the parts of a plant which collectively constitutes its foliage [44, 123]. Figure 4 shows the main characteristics of leaves with their corresponding botanical terms. Typically, a leaf consists of a blade (i.e., the flat part of a leaf) supported upon a petiole (i.e., the small stalk situated at the lower part of the leaf that joins the blade to the stem), which, continued through the blade as the midrib, gives off woody ribs and veins supporting the cellular texture. A leaf is termed “simple” if its blade is undivided, otherwise it is termed “compound” (i.e., divided into two or more leaflets). Leaflets may be arranged on either side of the rachis in pinnately compound leaves and centered around the base point (the point that joins the blade to the petiole) in palmately compound leaves [44]. Most studies use simple leaves for identification, while 29 studies considered compound leaves in their experiments. The internal shape of the blade is characterized by the presence of vascular tissue called veins, while the global shape can be divided into three main parts: (1) the leaf base, usually the lower 25% of the blade; the insertion point or base point, which is the point that joins the blade to the petiole, situated at its center. (2) The leaf tip, usually the upper 25% of the blade and centered by a sharp point called the apex. (3) The margin, which is the edge of the blade [44]. These local leaf characteristics are often used by botanists in the manual identification task and could also be utilized for an automated classification. However, the majority of existing leaf classification approaches rely on global leaf characteristics, thus ignoring these local information of leaf characteristics. Only eight primary studies consider local characteristics of leaves like the petiole, blade, base, and apex for their research [19, 85, 96, 97, 99, 119, 120, 158]. The characteristics of the leave margin is studied by six primary studies [18, 21, 31, 66, 85, 93].

In contrast to studies on leaves or plant foliage, a smaller number of 13 primary studies identify species solely based on flowers [3, 29, 30, 57, 60, 64, 104, 105, 112, 117, 128, 129, 149]. Some studies did not only focus on the flower region as a whole but also on parts of the flower. Hsu et al. [60] analyzed the color and shape not only of the whole flower region but also of the pistil area. Tan et al. [128] studied the shape of blooming flowers’ petals and [3] proposed analyzing the lip (labellum) region of orchid species. Nilsback and Zisserman [104, 105] propose features, which capture color, texture, and shape of petals as well as their arrangement.

Only one study proposes a multi-organ classification approach [68]. Contrary to other approaches that analyze a single organ captured in one image, their approach analyzes up to five different plant views capturing one or more organs of a plant. These different views are: full plant, flower, leaf (and leaf scan), fruit, and bark. This approach is the only one in this review dealing with multiple images exposing different views of a plant.

3.2.2 Images: Categories and Datasets

Utilized images in the studies fall into three categories: scans, pseudo-scans, and photos. While scan and pseudo-scan categories correspond respectively to plant images obtained through scanning and photography in front of a simple background, the photo category corresponds to plants photographed on natural background [49]. The majority of utilized images in the primary studies are scans and pseudo-scans thereby avoiding to deal with occlusions and overlaps (see Table 3). Only 25 studies used photos that were taken in a natural environment with cluttered backgrounds and reflecting a real-world scenario.

Existing datasets of leaf images were uses in 62 primary studies. The most important (by usage) and publicly available datasets are:

-

Swedish leaf dataset—The Swedish leaf dataset has been captured as part of a joined leaf classification project between the Linkoping University and the Swedish Museum of Natural History [127]. The dataset contains images of isolated leaf scans on plain background of 15 Swedish tree species, with 75 leaves per species (1125 images in total). This dataset is considered very challenging due to its high inter-species similarity [127]. The dataset can be downloaded here: http://www.cvl.isy.liu.se/en/research/datasets/swedish-leaf/.

-

Flavia dataset—This dataset contains 1907 leaf images of 32 different species and 50–77 images per species. Those leaves were sampled on the campus of the Nanjing University and the Sun Yat-Sen arboretum, Nanking, China. Most of them are common plants of the Yangtze Delta, China [144]. The leaf images were acquired by scanners or digital cameras on plain background. The isolated leaf images contain blades only, without petioles (http://flavia.sourceforge.net/).

-

ImageCLEF11 and ImageCLEF12 leaf dataset—This dataset contains 71 tree species of the French Mediterranean area captured in 2011 and further increased to 126 species in 2012. ImageCLEF11 contains 6436 pictures subdivided into three different groups of pictures: scans (48%), scan-like photos or pseudo-scans (14%), and natural photos (38%). The ImageCLEF12 dataset consists of 11,572 images subdivided into: scans (57%), scan-like photos (24%), and natural photos (19%). Both sets can be downloaded from ImageCLEF (2011) and ImageCLEF (2012): http://www.imageclef.org/.

-

Leafsnap dataset—The Leafsnap dataset contains leave images of 185 tree species from the Northeastern United States. The images are acquired from two sources and are accompanied by automatically-generated segmentation data. The first source are 23,147 high-quality lab images of pressed leaves from the Smithsonian collection. These images appear in controlled backlit and front-lit versions, with several samples per species. The second source are 7719 field images taken with mobile devices (mostly iPhones) in outdoor environments. These images vary considerably in sharpness, noise, illumination patterns, shadows, etc. The dataset can be downloaded at: http://leafsnap.com/dataset/.

-

ICL dataset—The ICL dataset contains isolated leaf images of 220 plant species with individual images per species ranging from 26 to 1078 (17,032 images in total). The leaves were collected at Hefei Botanical Garden in Hefei, the capital of the Chinese Anhui province by people from the local Intelligent Computing Laboratory (ICL) at the Institute of Intelligent Machines, China (http://www.intelengine.cn/English/dataset). All the leafstalks have been cut off before the leaves were scanned or photographed on a plain background.

-

Oxford Flower 17 and 102 datasets—Nilsback and Zisserman [104, 105] have created two flower datasets by gathering images from various websites, with some supplementary images taken from their own photographs. Images show species in their natural habitat. The Oxford Flower 17 dataset consists of 17 flower species represented by 80 images each. The dataset contains species that have a very unique visual appearance as well as species with very similar appearance. Images exhibit large variations in viewpoint, scale, and illumination. The flower categories are deliberately chosen to have some ambiguity on each aspect. For example, some classes cannot be distinguished by color alone, others cannot be distinguished by shape alone. The Oxford Flower 102 dataset is larger than the Oxford Flower 17 and consists of 8189 images divided into 102 flower classes. The species chosen consist of flowers commonly occurring in the United Kingdom. Each class consists of between 40 and 258 images. The images are rescaled so that the smallest dimension is 500 pixels. The Oxford Flower 17 dataset is not a full subset of the 102 dataset neither in images nor in species. Both datasets can be downloaded at: http://www.robots.ox.ac.uk/ ~vgg/data/flowers/.

Forty-eight authors use their own, not publicly available, leaf datasets. For these leave images, typically fresh material was collected and photographed or scanned in the lab on plain background. Due to the great effort in collecting material, such datasets are limited both in the number of species and in the number of images per species. Two studies used a combination of self-collected leaf images and images from web resources [74, 138]. Most plant classification approaches only focus on intact plant organs and are not applicable to degraded organs (e.g., deformed, partial, or overlapped) largely existing in nature. Only 21 studies proposed identification approaches that can also handle damaged leaves [24, 38, 46, 48, 56, 58, 74, 93, 102, 132, 141, 143] and overlapped leaves [18–20, 38, 46, 48, 74, 85, 102, 122, 130, 137, 138, 148].

Most utilized flower images were taken by the authors themselves or acquired from web resources [3, 29, 60, 104, 105, 112]. Only one study solely used self-taken photos for flower analysis [57]. Two studies analyzed the Oxford 17 and the Oxford 102 datasets (Table 4).

A majority of primary studies only evaluated their approach on datasets containing less than a hundred species (see Fig. 5) and at most a few thousand leaf images (see Fig. 6). Only two studies used a large dataset with more than 2000 species. Joly et al. [68] used a dataset with 2258 species and 44,810 images. In 2014 this was the plant identification study considering the largest number of species so far. In 2015 [143] published a study with 23,025 species represented by 1,000,000 images in total.

3.3 Feature Detection and Extraction (RQ-3)

Feature extraction is the basis of content-based image classification and typically follows the preprocessing step in the classification process. A digital image is merely a collection of pixels represented as large matrices of integers corresponding to the intensities of colors at different positions in the image [51]. The general purpose of feature extraction is reducing the dimensionality of this information by extracting characteristic patterns. These patterns can be found in colors, textures and shapes [51]. Table 5 shows the studied features, separated for studies analyzing leaves and those analyzing flowers, and highlights that shape plays the most important role among the primary studies. 87 studies used leaf shape and 13 studies used flower shape for plant species identification. The texture of leaves and flowers is analyzed by 24 and 5 studies respectively. Color is mainly considered along with flower analysis (9 studies), but a few studies also used color for leaf analysis (5 studies). In addition, organ-specific features, i.e., leaf vein structure (16 studies) and leaf margin (8 studies), were investigated.

Numerous methods exist in the literature for describing general and domain-specific features and new methods are being proposed regularly. Methods that were used for detecting and extracting features in the primary studies are highlighted in the subsequent sections. Because of perception subjectivity, there does not exist a single best presentation for a given feature. As we will see soon, for any given feature there exist multiple descriptions, which characterize the feature from different perspectives. Furthermore, different features or combinations of different features are often needed to distinguish different categories of plants. For example, whilst leaf shape may be sufficient to distinguish between some species, other species may have very similar leaf shapes to each other, but have different colored leaves or texture patterns. The same is also true for flowers. Flowers with the same color may differ in their shape or texture characteristics. Table 5 shows that 42 studies do not only consider one type of feature but use a combination of two or more feature types for describing leaves or flowers. No single feature may be sufficient to separate all the categories, making feature selection and description a challenging problem. Typically, this is the innovative part of the studies we reviewed. Segmentation and classification also allow for some flexibility, but much more limited. In the following sections, we will give an overview of the main features and their descriptors proposed for automated plant species classification (see also Fig. 7). First, we analyze the description of the general features starting with the most used feature shape, followed by texture, and color and later on we review the description of the organ-specific features leaf vein structure and leaf margin.

3.3.1 Shape

Shape is known as an important clue for humans when identifying real-world objects. A shape measure in general is a quantity, which relates to a particular shape characteristic of an object. An appropriate shape descriptor should be invariant to geometrical transformations, such as, rotation, reflection, scaling, and translation. A plethora of methods for shape representation can be found in the literature [151]. Shape descriptors are classified into two broad categories: contour-based and region-based. Contour-based shape descriptors extract shape features solely from the contour of a shape. In contrast, region-based shape descriptors obtain shape features from the whole region of a shape [72, 151]. In addition, there also exist some methods, which cannot be classified as either contour-based or region-based. In the following section, we restrict our discussion to those techniques that have been applied for plant species identification (see Table 6). We start our discussion with simple and morphological shape descriptors (SMSD) followed by a discussion of more sophisticated descriptors. Since the majority of studies focusses on plant identification via leaves, the discussed shape descriptors mostly apply to leaf shape classification. Techniques which were used for flower analysis will be emphasized.

3.3.2 Simple and Morphological Shape Descriptors

Across the studies we found six basic shape descriptors used for leaf analysis (see first six rows of Table 7). These refer to basic geometric properties of the leaf’s shape, i.e., diameter, major axis length, minor axis length, area, perimeter, centroid (see, e.g., [144]). On top of that, studies compute and utilize morphological descriptors based on these basic descriptors, e.g., aspect ratio, rectangularity measures, circularity measures, and the perimeter to area ratio (see Table 6). Table 6 shows that studies often employ ratios as shape descriptors. Ratios are simple to compute and naturally invariant to translation, rotation, and scaling; making them robust against different representations of the same object (aka leaf). In addition, several studies proposed more leaf-specific descriptors. For example, [58] introduce a leaf width factor (LWF), which is extracted from leaves by slicing across the major axis and parallel to the minor axis. Then, the LWF per strip is calculated as the ratio of the width of the strip to the length of the entire leaf (major axis length). Yanikoglu et al. [148] propose an area width factor (AWF) constituting a slight variation of the LWF. For AWF, the area of each strip normalized by the global area is computed. As another example, [116] used a porosity feature to explain cracks in the leaf image (Table 7).

However, while there typically exists high morphological variation across different species’ leaves, there is also often considerable variance among leaves of the same species. Studies’ results show that SMSD are too much simplified to discriminate leaves beyond those with large differences sufficiently. Therefore, they are usually combined with other descriptors, e.g., more complex shape analysis [1, 15, 40, 72, 73, 106, 110, 137, 146], leaf texture analysis [154], vein analysis [5, 144], color analysis [16, 116], or all of them together [43, 48]. SMSD are usually employed for high-level discrimination reducing the search space to a smaller set of species without losing relevant information and allowing to perform computationally more expensive operations at a later stage on a smaller search space [15].

Similarly, SMSD play an important role for flower analysis. Tan et al. [129] propose four flower shape descriptors, namely, area, perimeter of the flower, roundness of the flower, and aspect ratio. A simple scaling and normalization procedure has been employed to make the descriptors invariant to varying capture situations. The roundness measure and aspect ratio in combination with more complex shape analysis descriptors are used by [3] for analyzing flower shape.

In conclusion, the risk of SMSD is that any attempt to describe the shape of a leaf using only 5–10 descriptors may oversimplify matters to the extent that meaningful analysis becomes impossible, even if they seem sufficient to classify a small set of test images. Furthermore, many single-value descriptors are highly correlated with each other, making the task of choosing sufficiently independent features to distinguish categories of interest especially difficult [33].

3.3.3 Region-Based Shape Descriptors

Region-based techniques take all the pixels within a shape region into account to obtain the shape representation, rather than only using boundary information as the contour-based methods do. In this section, we discuss the most popular region-based descriptors for plant species identification: image moments and local feature techniques.

Image moments. Image moments are a widely applied category of descriptors in object classification. Image moments are statistical descriptors of a shape that are invariant to translation, rotation, and scale. Hu [61] proposes seven image moments, typically called geometric moments or Hu moments that attracted wide attention in computer vision research. Geometric moments are computationally simple, but highly sensitive to noise. Among our primary studies, geometric moments have been used for leaf analysis [22, 23, 40, 65, 72, 73, 102, 110, 137, 138, 154] as well as for flower analysis [3, 29]. Geometric moments as a standalone feature are only studied by [102]. Most studies combine geometric moments with the previously discussed SMSD [3, 23, 40, 72, 73, 110, 137, 154]. Also the more evolved Zernike moment invariant (ZMI) and Legendre moment invariant (LMI), based on an orthogonal polynomial basis, have been studied for leaf analysis [72, 138, 159]. These moments are also invariant to arbitrary rotation of the object, but in contrast to geometric moments they are not sensitive to image noise. However, their computational complexity is very high. Kadir et al. [72] found ZMI not to yield better classification accuracy than geometric moments. Zulkifli et al. [159] compare three moment invariant techniques, ZMI, LMI, and moments of discrete orthogonal basis (aka Tchebichef moment invariant (TMI)) to determine the most effective technique in extracting features from leaf images. In result, the authors identified TMI as the most effective descriptor. Also [106] report that TMI achieved the best results compared with geometric moments and ZMI and were therefore used as supplementary features with lower weight in their classification approach.

Local feature techniques. In general, the concept of local features refers to the selection of scale-invariant keypoints (aka interest points) in an image and their extraction into local descriptors per keypoint. These keypoints can then be compared with those obtained from another image. A high degree of matching keypoints among two images indicates similarity among them. The seminal Scale-invariant feature transform (SIFT) approach has been proposed by [86]. SIFT combines a feature detector and an extractor. Features detected and extracted using the SIFT algorithm are invariant to image scale, rotation, and are partially robust to changing viewpoints and changes in illumination. The invariance and robustness of the features extracted using this algorithm makes it also suitable for object recognition rather than image comparison.

SIFT has been proposed and studied for leaf analysis by [26, 27, 59, 81]. A challenge that arises for object classification rather than image comparison is the creation of a codebook with trained generic keypoints. The classification framework by [26] combines SIFT with the Bag of Words (BoW) model. The BoW model is used to reduce the high dimensionality of the data space. Hsiao et al. [59] used SIFT in combination with sparse representation (aka sparse coding) and compared their results to the BoW approach. The authors argue that in contrast to the BoW approach, their sparse coding approach has a major advantage as no re-training of the classifiers for newly added leaf image classes is necessary. In [81], SIFT is used to detect corners for classification. Wang et al. [139] propose to improve leaf image classification by utilizing shape context (see below) and SIFT descriptors in combination so that both global and local properties of a shape can be taken into account. Similarly, [74] combines SIFT with global shape descriptors (high curvature points on the contour after chain coding). The author found the SIFT method by itself not successful at all and its accuracy significantly lower compared to the results obtained by combining it with global shape features. The original SIFT approach as well as all so far discussed SIFT approaches solely operate on grayscale images. A major challenge in leaf analysis using SIFT is often a lack of characteristic keypoints due to the leaves’ rather uniform texture. Using colored SIFT (CSIFT) can address this problem and will be discussed later in the section about color descriptors.

Another substantially studied local feature approach is the histogram of oriented gradients (HOG) descriptor [41, 111, 145, 155]. The HOG descriptor, introduced by [86] is similar to SIFT, except that it uses an overlapping local contrast normalization across neighboring cells grouped into a block. Since HOG computes histograms of all image cells and there are even overlap cells between neighbor blocks, it contains much redundant information making dimensionality reduction inevitably for further extraction of discriminant features. Therefore, the main focus of studies using HOG lies on dimensionality reduction methods. Pham et al. [111], Xiao et al. [145] study the maximum margin criterion (MMC), [41] studies principle component analysis (PCA) with linear discriminant analysis (LDA), and [155] introduce attribute-reduction based on neighborhood rough sets. Pham et al. [111] compared HOG features with Hu moments and the obtained results show that HOG is more robust than Hu moments for species classification. Xiao et al. [145] found that HOG-MMC achieves a better accuracy than the inner-distance shape context (IDSC) (will be introduced in the section about contour based shape descriptors), when leaf petiole were cut off before analysis. A disadvantage of the HOG descriptor is its sensitivity to the leaf petiole orientation while the petiole’s shape actually carrying species characteristics. To address this issue, a pre-processing step can normalize petiole orientation of all images in a dataset making them accessible to HOG [41, 155].

Nguyen et al. [103] studied speeded up robust features (SURF) for leaf classification, which was first introduced by [9]. The SURF algorithm follows the same principles and procedure as SIFT. However, details per step are different. The standard version of SURF is several times faster than SIFT and claimed by its authors to be more robust against image transformations than SIFT [9]. To reduce dimensionality of extracted features, [103] apply the previously mentioned BoW model and compared their results with those of [111]. SURF was found to provide better classification results than HOG [111].

Ren et al. [121] propose a method for building leaf image descriptors by using multi-scale local binary patterns (LBP). Initially, a multi-scale pyramid is employed to improve leaf data utilization and each training image is divided into several overlapping blocks to extract LBP histograms in each scale. Then, the dimension of LBP features is reduced by a PCA. The authors found that the extracted multi-scale overlapped block LBP descriptor can provide a compact and discriminative leaf representation.

Local features have also been studied for flower analysis. Nilsback and Zisserman [104], Zawbaa et al. [149] used SIFT on a regular grid to describe shapes of flowers. Nilsback and Zisserman [105] proposed to sample HOG and SIFT on both, the foreground and its boundary. The authors found SIFT descriptors extracted from the foreground to perform best, followed by HOG, and finally SIFT extracted from the boundary of a flower shape. Combining foreground SIFT with boundary SIFT descriptors further improved the classification results.

Qi et al. [117] studied dense SIFT (DSIFT) features to describe flower shape. DSIFT is another SIFT-like feature descriptor. It densely selects points evenly in the image, on each pixel or on each n-pixels, rather than performing salient point detection, which make it strong in capturing all features in an image. But DSIFT is not scale-invariant, to make it adaptable to changes in scale, local features are sampled by different scale patches within an image [84]. Unlike the work of [104, 105], [117] take the full image as input instead of a segmented image, which means that extended background greenery may affect their classification performance to some extent. However, the results of [117] are comparable to the results of [104, 105]. When considering segmentation and complexity of descriptor as factors, the authors even claim that their method facilitates more accurate classification and performs more efficiently than the previous approaches.

3.3.4 Contour-Based Shape Descriptors

Contour-based descriptors solely consider the boundary of a shape and neglect the information contained in the shape interior. A contour-based descriptor for a shape is a sequence of values calculated at points taken around an object’s outline, beginning at some starting point and tracing the outline in either a clockwise or an anti-clockwise direction. In this section, we discuss popular contour-based descriptors namely shape signatures, shape context approaches, scale space, the Fourier descriptor, and fractal dimensions.

Shape signatures. Shape signatures are frequently used contour-based shape descriptors, which represent a shape by an one dimensional function derived from shape contour points. There exists a variety of shape signatures. We found the centroid contour distance (CCD) to be the most studied shape signature for leaf analysis [10, 28, 46, 130] and flower analysis [3, 57]. The CCD descriptor consists of a sequence of distances between the center of the shape and points on the contour of a shape. Other descriptors consist of a sequence of angles to represent the shape, e.g., the centroid-angle (AC) [10, 46] and the tangential angle (AT) [6]. A comparison between CCD and AC sequences performed by [46] demonstrated that CCD sequences are more informative than AC sequences. This observation is intuitive since the CCD distance includes both global information related to the leaf area and shape as well as local information related to contour details. Therefore, when combining CCD and AC, which is expected to further improve classification performance, the CCD should be emphasized by giving it a higher classification weight [46].

Mouine et al. [92] investigate two multi-scale triangular approaches for leaf shape description: the well-known triangle area representation (TAR) and the triangle side length representation (TSL). The TAR descriptor is computed based on the area of triangles formed by points on the shape contour. TAR provides information about shape properties, such as the convexity or concavity at each contour point of the shape, and provides high discrimination capability. Although TAR is affine-invariant and robust to noise and deformation, it has a high computational cost since all the contour points are used. Moreover, TAR has two major limitations: (a) the area is not informative about the type of the considered triangle (isosceles, equilateral, etc.), which may be crucial for a local description of the contour. (b) The area is not accurate enough to represent the shape of a triangle [94]. The TSL descriptor is computed based on the side lengths rather than the area of a triangle. TSL is invariant under scale, translation, rotation, and reflection around contour points. Studies found TSL to provide yield higher classification accuracy than TAR [92, 94]. The authors argue that this result may be due to the fact that using side lengths to represent a triangle is more accurate than using its area. In addition to the two multi-scale triangular approaches, [94] also proposed two representations that they denote triangle oriented angles (TOA) and triangle side lengths and angle representation (TSLA). TOA solely uses angle values to represent a triangle. Angle orientation provides information about local concavities and convexities. In fact, an obtuse angle means convex, an acute angle means concave. TOA is not invariant under reflection around the contour point: only similar triangles having equal angles will have equal TOA values. TSLA is a multi-scale triangular contour descriptor that describes the triangles by their lengths and angle. Like TSL, the TSLA descriptor is invariant under scale and reflection around the contour points. The authors found that the angular information provides a more precise description when being jointly used with triangle side lengths (i.e., TSL) [94].

A disadvantage of shape signatures for leaf and flower analysis is the high matching cost, which is too high for online retrieval. Furthermore, shape signatures are sensitive to noise and changes in the contour. Therefore, it is undesirable to directly describe a shape using a shape signature. On the other hand, further processing can increase its robustness and reduce the matching load. For example, a shape signature can be simplified by quantizing a contour into a contour histogram, which is then rotationally invariant [151]. For example, an angle code histogram (ACH) has been used instead of AC by [148]. However, the authors did not compare AC against ACH.

Shape context approaches. Beyond the CCD and AC descriptors discussed before, there are other alternative methods that intensively elaborate a shape’s contour to extract useful information. Belongie et al. [12] proposed a shape descriptor, called shape context (SC), that represent log-polar histograms of contour distribution. A contour is resampled to a fixed number of points. In each of these points, a histogram is computed such that each bin counts the number of sampled contour points that fall into its space. In other words, each contour point is described by a histogram in the context of the entire shape. Descriptors computed in similar points on similar shapes will provide close histograms. However, articulation (e.g., relative pose of the petiole or the position of the blade) results in significant variation of the calculated SC. In order to obtain articulation invariance, [83] replaced the Euclidean distance and relative angles by inner-distances and inner-angles. The resulting 2D histogram, called inner-distance shape context (IDSC), was reported to perform better than many other descriptors in leaf analysis [11]. It is robust to the orientation of the footstalk, but at the cost of being a shape descriptor that is extensive in size and expensive in computational cost. For example, [139] does not employ IDSC due to its expensive computational cost.

Hu et al. [62] propose a contour-based shape descriptor named multi-scale distance matrix (MDM) to capture the geometric structure of a shape, while being invariant to translation, rotation, scaling, and bilateral symmetry. The approach can use Euclidean distances as well as inner distances. MDM is considered a most effective method since it avoids the use of dynamic programming for building the point-wise correspondence. Compared to other contour-based approaches, such as SC and IDSC, MDM can achieve comparable recognition performance while being more computationally efficient [62]. Although MDM effectively describes the broad shape of a leaf, it fails in capturing details, such as leaf margin. Therefore, [73] proposed a method that combines contour (MDM), margin (average margin distance (AMD), margin statistics (MS)), SMSD and Hu moments and demonstrated higher classification accuracy than reached by using MDM and SMSD with Hu moments alone.

Zhao et al. [158] made two observations concerning shape context approaches. First, IDSC cannot model local details of leaf shapes sufficiently, because it is calculated based on all contour points in a hybrid way so that global information dominates the calculation. As a result, two different leaves with similar global shape but different local details tend to be misclassified as the same species. Second, the point matching framework of generic shape classification methods does not work well for compound leaves since their local details are hard to be matched in pairs. To solve this problem, [158] proposed an independent-IDSC (I-IDSC) feature. Instead of calculating global and local information in a hybrid way, I-IDSC calculates them independently so that different aspects of a leaf shape can be examined individually. The authors argue that compared to IDSC [11, 83] and MDM [62], the advantage of I-IDSC is threefold: (1) it discriminates leaves with similar overall shape but different margins and vice versa; (2) it accurately classifies both simple and compound leaves; and (3) it only keeps the most discriminative information and can thus be more efficiently computed [158].

Wang et al. [134, 135] developed a multi scale-arch-height descriptor (MARCH), which is constructed based on the concave and convex measures of arches of various levels. This method extracts hierarchical arch height features at different chord spans from each contour point to provide a compact, multi-scale shape descriptor. The authors claim that MARCH has the following properties: invariant to image scale and rotation, compactness, low computational complexity, and coarse-to-fine representation structure. The performance of the proposed method has been evaluated and demonstrated to be superior to IDSC and TAR [134, 135].

Scale space analysis. A rich representation of a shape’s contour is the curvature-scale space (CSS). It piles up curvature measures at each point of the contour over successive smoothing scales, summing up the information into a map where concavities and convexities clearly appear, as well as the relative scale up to which they persist [151]. Florindo et al. [45] propose an approach to leaf shape identification based on curvature complexity analysis (fractal dimension based on curvature). By using CSS, a curve describing the complexity of the shape can be computed and theoretically be used as descriptor. Studies found the technique to be superior to traditional shape analysis methods like FD, Zernike moments, and multi-scale fractal dimension [45]. However, while CSS is a powerful description it is too informative to be used as a descriptor. The implementation and matching of CSS is very complex. Curvature has also been used to detect dominant points (points of interest or characteristic points) on the contour, and provides a compact description of a contour by its curvature optima. Studies select this characteristic or the most prominent points based on the graph of curvature values of the contour as descriptor [15, 18]. Lavania and Matey [81] use mean projection transform (MPT) to extract corner candidates by selecting only candidates that have high curvature (contour-based edge detection). Kebapci et al. [74] extract high curvature points on the contour by analyzing direction changes in the chain code. They represent the contour as a chain code, which is a series of enumerated direction codes. These points (aka codes) are labeled as convex or concave depending on their position and direction (or curvature of the contour). Kumar et al. [76] suggest a leaf classification method using so-called histograms of curvature over scale (HoCS). HoCS are built from CSS by creating histograms of curvature values over different scales. One limitation of the HoCS method is that it is not articulation-invariant, i.e., that a change caused by the articulation either between the blade and petiole of a simple leaf, or among the leaflets of a compound leaf can cause significant changes to the calculated HoCS feature. Therefore, it needs special treatment of leaf petioles and the authors suggest to detect and remove the petiole before classification. Chen et al. [28] used a simplified curvature of the leaf contour, called velocity. The results showed that the velocity algorithms were faster at finding contour shape characteristics and more reasonable in their characteristic matching than CSS. Laga et al. [77] study the performance of the squared root velocity function (SRVF) representation of closed planar curves for the analysis of leaf shapes and compared it to IDSC, SC, and MDM. SRVF significantly outperformed the previous shape-based techniques. Among the lower performing techniques in this study, SC and MDM performed equally, IDCS achieved the lowest performance.

Fourier descriptors. Fourier descriptors (FD) are a classical method for shape recognition and have grown into a general method to encode various shape signatures. By applying a Fourier transform, a leaf shape can be analyzed in the frequency domain, rather than the spatial domain as done with shape signatures. A set number of Fourier harmonics are calculated for the outline of an object, each consisting of only four coefficients. These Fourier descriptors capture global shape features in the low frequency terms (low number of harmonics) and finer features of the shape in the higher frequency terms (higher numbers of harmonics). The advantages of this method are that it is easy to implement and that it is based on the well-known theory of Fourier analysis [33]. FD can easily be normalized to represent shapes independently of their orientation, size, and location; thus easing comparison between shapes. However, a disadvantage of FDs is that they do not provide local shape information since this information is distributed across all coefficients after the transformation [151]. A number of studies focused on FD, e.g., [147] use FD computed on distances of contour points from the centroid, which is advantageous for smaller datasets. Kadir et al. [72] propose a descriptor based on polar Fourier transform (PFT) to extract the shape of leaves and compared it with SMSD, Hu, and Zernike moments. Among those methods, PFT achieved the most prospective classification result. Aakif and Khan [1], Yanikoglu et al. [148] used FD in combination with SMSD. The authors obtained more accurate classification results by using FD than with SMSD alone. However, they achieved the best result by combining all descriptors. Novotny and Suk [106] used FD in combination with TMI and major axis length. Several studies that propose novel methods for leaf shape analysis benchmark their descriptor against FD in order to prove effectiveness [45, 62, 134, 147, 158].

Fractal dimension. The fractal dimension (FracDim) of an object is a real number used to represent how completely a shape fills the dimensional space to which it belongs. The FracDim descriptor can provide a useful measure of a leaf shape’s complexity. In theory, measuring the fractal dimension of leaves or flowers can quantitatively describe and classify even morphologically complex plants. Only a few studies used FracDim for leaf analysis [14, 65, 67] and flower analysis [3]. Bruno et al. [14] compare box-counting and multi-scale Minkowski estimates of fractal dimension. Although the box-counting method provided satisfactory results, Minkowski’s multi-scale approach proved superior in terms of characterizing plant species. Given the wide variety of leaf and flower shapes, characterizing their shape by a single value descriptor of complexity likely discards useful information, suggesting that the FracDim descriptors may only be useful in combination with other descriptors. For example, [65] demonstrated that leaf analysis with FracDim descriptors are effective and yield higher classification rates than Hu moments. When combining both, even better results were achieved. One step further, [14, 65, 67] proposed methods for combining the FD descriptor of a leaf’s shape with a FracDim descriptor computed on the venation of the leaf to rise classification performance (further details in the section about vein feature).

3.3.5 Color

Color is an important feature of images. Color properties are defined within a particular color space. A number of color spaces have been applied across the primary studies, such as red-green-blue (RGB), hue-saturation-value (HSV), hue-saturation-intensity (HSI), hue-max-min-diff (HMMD), LUV (aka CIELUV), and more recently Lab (aka CIELAB). Once a color space is specified, color properties can be extracted from images or regions. A number of general color descriptors have been proposed in the field of image recognition, e.g., color moments (CM), color histograms (CH), color coherence vector, and color correlogram [153]. CM are a rather simple descriptor, the common moments being mean, standard deviation, skewness, and kurtosis. CM are used for characterizing planar color patterns, irrespective of viewpoint or illumination conditions and without the need for object contour detection. CM is known for its low dimension and low computational complexity, thus, making it convenient for real-time applications. CH describes the color distribution of an image. It quantizes a color space into different bins and counts the frequency of pixels belonging to each color bin. This descriptor is robust to translation and rotation. However, CH does not encode spatial information about the color distribution. Therefore, visually different images can have similar CH. In addition, a histogram is usually of high dimensionality [153]. A major challenge for color analysis is light variations due to different intensity and color of the light falling from different angles. These changes in illumination can cause shadowing effects and intensity changes. For species classification the most studied descriptors are CM [16, 43, 48, 87, 116, 148] and CH [3, 16, 29, 57, 74, 87, 104, 105, 112, 148]. An overview of all primary studies that analyze color is shown in Table 8.

Leaf analysis. Only 8 of 106 studies applying leaf analysis also study color descriptors. We always found color descriptors being jointly studied together with leaf shape descriptors. Kebapci et al. [74] use three different color spaces to produce CH and color co-occurrence matrices (CCM) for assessing the similarity between two images; namely RGB, normalized RGB (nRGB), and HSI, where nRGB-CH facilitated the best results. Yanikoglu et al. [148] studied the effectiveness of color descriptors, specifically the RGB histogram and CM. The authors found CM to provide the most accurate results. However, the authors also found that color information did not contribute to the classification accuracy when combined with shape and texture descriptors. Caglayan et al. [16] defined different sets of color features. The first set consisted of mean and standard deviation of intensity values of the red, the green, and the blue channel and an average of these channels. The second set consisted of CH in red, green, and blue channels. The authors found the first four CM to be an efficient and effective way for representing color distribution of leaf images [43, 48, 116, 148]. Che Hussin et al. [27] proposed a grid-based CM as descriptor. Each image is divided into a 3x3 grid, then each cell is described by mean, standard deviation, and the third root of the skewness. In contrast, [87] evaluated the first three central moments, which they found to be not discriminative according their experimental results.

Flower analysis. Color plays a more important role for flower analysis than for leaf analysis. We found that 9 out of 13 studies on flower analysis use color descriptors. However, using color information solely, without considering flower shape features, cannot classify flowers effectively [104, 105]. Flowers are often transparent to some degree, i.e., that the perceived color of a flower differs depending on whether the light comes from behind or in front of the flower. Since flower images are taken under different environmental conditions, the variation in illumination is greatly affecting analysis results [126]. To deal with this problem, [3, 30, 57, 60] converted their images from the RGB color space into the HSV space and discarded the illumination (V) component. Apriyanti et al. [3] studied discrimination power of features for flower images and identified the following relation from the highest to the lowest: CCD (shape), HSV color, and geometric moments (shape). Hsu et al. [60] found that color features have more discriminating ability than the center distance sequence and the roundness shape features. Qi et al. [117] study a method where they select local keypoints with colored SIFT (CSIFT). CSIFT is a SIFT-like descriptor that builds on a color invariants. It employs the same strategy as SIFT for building descriptors. The local gradient-orientation histograms for the same-scale neighboring pixels of a keypoint are used as descriptor. All orientations are assigned relative to a dominant orientation of the keypoint. Thus, the built descriptor is invariant to the global object orientation and is stable to occlusion, partial appearance, and cluttered surroundings due to the local description of keypoints. As CSIFT uses color invariants for building the descriptor, it is robust to photometric changes [2]. Qi et al. [117] even found the performance of CSIFT to be superior over SIFT.

3.3.6 Texture

Texture is the term used to characterize the surface of a given object or phenomenon and is undoubtedly a main feature used in computer vision and pattern recognition [142, 153]. Generally, texture is associated to the feel of different materials to human touch. Texture image analysis is based on visual interpretation of this feeling. Compared to color, which is usually a pixel property, texture can only be assessed for a group of pixels [153]. Grayscale texture analysis methods are generally grouped into four categories: signal processing methods based on a spectral transform, such as, Fourier descriptors (FD) and Gabor filters (GF); statistical methods that explore the spatial distribution of pixels, e.g., co-occurrence matrices; structural methods that represent texture by primitives and rules; and model-based methods based on fractal and stochastic models. However, some recently proposed methods cannot be classified into these four categories. For instance, methods based on deterministic walks, fractal dimension, complex networks, and gravitational models [37]. An overview of all primary studies that analyze texture is shown in Table 9.

Leaf analysis. For leaf analysis, twelve studies analyzed texture solely and another twelve studies combined texture with other features, i.e., shape, color, and vein. The most frequently studied texture descriptors for leaf analysis are Gabor filter (GF) [17, 23, 32, 74, 132, 150], fractal dimensions (FracDim) [7, 8, 36, 37], and gray level co-occurrence matrix (GLCM) [23, 32, 43, 48].

GF are a group of wavelets, with each wavelet capturing energy at a specific frequency and in a specific direction. Expanding a signal provides a localized frequency description, thereby capturing the local features and energy of the signal. Texture features can then be extracted from this group of energy distributions. GF has been widely adopted to extract texture features from images and has been demonstrated to be very efficient in doing so [152]. Casanova et al. [17] applied GF on sample windows of leaf lamina without main venation and leaf margins. They observed a higher performance of GF than other traditional texture analysis methods such as FD and GLCM. Chaki et al. [23], Cope et al. [32] combined banks of GF and computed a series of GLCM based on individual results. The authors found the performance of their approach to be superior to standalone GF and GLCM. Yanikoglu et al. [148] used GF and HOG for texture analysis and found GF to have a higher discriminatory power. Venkatesh and Raghavendra [132] proposed a new feature extraction scheme termed local Gabor phase quantization (LGPQ), which can be viewed as the combination of GF with a local phase quantization scheme. In a comparative analysis the proposed method outperformed GF as well as the local binary pattern (LBP) descriptor.

Natural textures like leaf surfaces do not show detectable quasi-periodic structures but rather have random persistent patterns [63]. Therefore, several authors claim fractal theory to be better suited than statistical, spectral, and structural approaches for describing these natural textures. Authors found the volumetric fractal dimension (FracDim) to be very discriminative for the classification of leaf textures [8, 122]. Backes and Bruno [7] applied multi-scale volumetric FracDim for leaf texture analysis. de M Sa Junior et al. [36, 37] propose a method combining gravitational models with FracDim and lacunarity (counterpart to the FracDim that describes the texture of a fractal) and found it to outperform FD, GLCM, and GF.

Surface gradients and venation have also been exploited using the edge orientation histogram descriptor (EOH) [10, 10, 91, 148]. Here the orientations of edge gradients are used to analyze the macro-texture of the leaf. In order to exploit the venation structure, [25] propose the EAGLE descriptor for characterizing leaf edge patterns within a spatial context. EAGLE exploits the vascular structure of a leaf within a spatial context, where the edge patterns among neighboring regions characterize the overall venation structure and are represented in a histogram of angular relationships. In combination with SURF, the studied descriptors are able to characterize both local gradient and venation patterns formed by surrounding edges.

Elhariri et al. [43] studied first and second order statistical properties of texture. First order statistical properties are: average intensity, average contrast, smoothness, intensity histogram’s skewness, uniformity, and entropy of grayscale intensity histograms (GIH). Second order statistics (aka statistics from GLCM) are well known for texture analysis and are defined over an image to be the distribution of co-occurring values at a given offset [55]. The authors found that the use of first and second order statistical properties of texture improved classification accuracy compared to using first order statistical properties of texture alone. Ghasab et al. [48] derive statistics from GLCM, named contrast, correlation, energy, homogeneity, and entropy and combined them with shape, color, and vein features. Wang et al. [136] used dual-scale decomposition and local binary descriptors (DS-LBP). DS-LBP descriptors effectively combine texture and contour of a leaf and are invariant to translation and rotation.

Flower analysis. Texture analysis also plays an important role for flower analysis. Five of the 13 studies analyze the texture of flowers, whereby texture is always analyzed in combination with shape or color. Nilsback and Zisserman [104, 105] describe the texture of flowers by convolving the images with a Leung-Malik (MR) filter bank. The filter bank contains filters with multiple orientations. Zawbaa et al. [149] propose the segmentation-based fractal texture analysis (SFTA) to analyze the texture of flowers. SFTA breaks the input image into a set of binary images from which region boundaries’ FracDim are calculated and segmented texture patterns are extracted.

3.3.7 Leaf-Specific Features

Leaf venation. Veins provide leaves with structure and a transport mechanism for water, minerals, sugars, and other substances. Leaf veins can be, e.g., parallel, palmate, or pinnate. The vein structure of a leaf is unique to a species. Due to a high contrast compared to the rest of the leaf blade, veins are often clearly visible. Analyzing leaf vein structure, also referred to as leaf venation, has been proposed in 16 studies (see Table 10).

Only four studies solely analyzed venation as a feature discarding any other leaf features, like, shape, size, color, and texture [53, 78–80]. Larese et al. [78–80] introduced a framework for identifying three legumes species on the basis of leaf vein features. The authors computed 52 measures per leaf patch (e.g., the total number of edges, the total number of nodes, the total network length, median/min/max vein length, median/min/max vein width). Larese et al. [80] defines and discusses each measure. The author [80] performed an experiment using images that were cleared using a chemical process (enhancing high contrast leaf veins and higher orders of visible veins), which increased their accuracy from 84.1 to 88.4% compared to uncleared images at the expense of time and cost for clearing. Gu et al. [53] processed the vein structure using a series of wavelet transforms and Gaussian interpolation to extract a leaf skeleton that was then used to calculate a number of run-length features. A run-length feature is a set of consecutive pixels with the same gray level, collinear in a given direction, and constituting a gray level run. The run length is the number of pixels in the run and the run length value is the number of times such a run occurs in an image. The authors obtained a classification accuracy of 91.2% on a 20 species dataset.

Twelve studies analyzed venation in combination with the shape of leaves [4, 5, 14, 65, 67, 101, 107, 108, 139, 144] and two studies analyzed venation in combination with shape, texture, and color [43, 48]. Nam et al. [101], Park et al. [107, 108] extract structure features in order to categorize venation patterns. Park et al. [107, 108] propose a leaf image retrieval scheme, which analyzes the venation of a leaf sketch drawn by the user. Using the curvature scale scope corner detection method on the venation drawing they categorize the density of feature points (end points and branch points) by using non-parametric estimation density. By extracting and representing these venation types, they could improve the classification accuracy from 25 to 50%. Nam et al. [101] performed classification on graph representations of veins and combined it with modified minimum perimeter polygons as shape descriptor. The authors found their method to yield better results than CSS, CCD, and FD. Four groups of researchers [5, 43, 48, 144] studied the ratio of vein-area (number of pixels that represent venation) and leaf-area (\(A_{vein}/ A_{leaf}\)) after morphological opening. Elhariri et al. [43], Ghasab et al. [48] found that using a combination of all features (vein, shape, color, and texture) yielded the highest classification accuracy. Wang et al. [139] used SC and SIFT extracted from contour and vein sample points. They noticed that vein patterns are not always helpful for SC based classification. Since in their experiments, vein extraction based on simple Canny edge detection generated noisy outputs utilizing the resulting vein patterns in shape context led to unstable classification performance. The authors claim that this problem can be remedied with advanced vein extraction algorithms [139]. Bruno et al. [14], Ji-Xiang et al. [65] and Jobin et al. [67] studied FracDim extracted from the venation and the outline of leafs and obtained promising results. Bruno et al. [14] argues that the segmentation of a leaf venation system is a complex task, mainly due to low contrast between the venation and the rest of the leaf blade structure. The authors propose a methodology divided into two stages: (i) chemical leaf clarification, and (ii) segmentation by computer vision techniques. Initially, the fresh leaf collected in the herbarium, underwent a chemical process of clarification. The purpose was removing the genuine leaf pigmentation. Then, the fresh leaves were digitalized by a scanner. Ji-Xiang et al. [65], Jobin et al. [67] did not use any chemical or biological procedure to physically enhance the leaf veins. They obtained a classification accuracy of 87% on a 30 species dataset and 84% on a 50 species dataset, respectively.

Leaf margin. All leaves exhibit margins (leaf blade edges) that are either serrated or unserrated. Serrated leaves have teeth, while unserrated leaves have no teeth and are described as being smooth. These margin features are very useful for botanists when describing leaves, with typical descriptions including details such as the tooth spacing, number per centimeter, and qualitative descriptions of their flanks (e.g., convex or concave). Leaf margin has seen little use in automated species identification with 8 out of 106 studies focusing on it (see Table 10). Studies usually combine margin analysis with shape analyses [18, 20, 21, 73, 85, 93]. Two studies used margin as sole feature for analysis [31, 66].

Jin et al. [66] propose a method based on morphological measurements of leaf tooth, discarding leaf shape, venation, and texture. The studied morphological measurements are the total number of teeth, the ratio between the number of teeth and the length of the leaf margin expressed in pixels, leaf-sharpness, and leaf-obliqueness. Leaf-sharpness, is measured per tooth as an acute triangle obtained by connecting the top edge and two bottom edges of the leaf tooth. Thus, for a leaf image, many triangles corresponding to leaf teeth are obtained. In their method, the acute angle for each leaf tooth is exploited as a measure for plant identification. The proposed method achieves an average classification rate of around 76% for the eight studied species. Cope and Remagnino [31] extracts a margin signature based on the leaf’s insertion point and apex. A classification accuracy of 91% was achieved on a larger dataset containing 100 species. The authors argue that accurate identification of insertion point and apex may also be useful when considering other leaf features, e.g., venation. Two shape context based descriptors have been presented and combined for plant species identification by [93]. The first one gives a description of the leaf margin. The second one computes the spatial relations between the salient points and the leaf contour points. Results show that a combination of margin and shape improved classification performance in contrast to using them as separate features. Kalyoncu and Toygar [73] use margin statistics over margin peaks, i.e., average peak height, peak height variance, average peak distance, and peak distance variance, to describe leave margins and combined it with simple shape descriptors, i.e., Hu moments and MDM. In [18, 20], contour properties are investigated utilizing a CSS representation. Potential teeth are explicitly extracted and described and the margin is then classified into a set of inferred shape classes. These descriptors are combined base and apex shape descriptors. Cerutti et al. [21] introduces a sequence representation of leaf margins where teeth are viewed as symbols of a multivariate real valued alphabet. In all five studies [18, 20, 21, 73, 85] combining shape and margin features improved classification results in contrast to analyzing the features separately.

3.4 Comparison of Studies (RQ-4)